The Rhizomic (Memory) Collection: A Non-Linear Approach to the Preservation of Video Games, Game Consoles, and Their Data

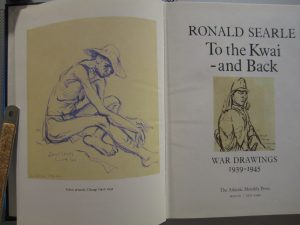

The seminar “The Research Collection” has, in a way, encouraged me to reflect on my book and music collecting habits: to assess the memories I have preserved in the corners of my mind, and the value and relevance I place upon them. For the first time, I considered myself a collector of memories, not only of objects. If collecting is not limited to physical objects but also encompasses all sorts of memories, then objects are a means to gain experiences that, in turn, leave memories imprinted in us. Such aspects function at the personal level; however, what happens to the collection of objects at the university and museum level? Sheila Brennan claims in “Making Room by Letting Go” that the purpose of collecting is to gather “the material culture evidence that will help historians interpret the lives of early 21st-century Americans.” The aim is clear: to gather as much relevant information as possible on a given object. Museums and universities actively collect material heritage. Nevertheless, as Raiford Guins notes in “Museified,” museums offer limited forms of access, and so they let objects fall into oblivion (66). Some universities, on the other hand, lack expert knowledge on the preservation of artifacts (Brennan). In what ways, then, can scholars and university students aid in the preservation of cultural heritage? The purpose of this study is to present a non-centered approach for (1) collecting, (2) making accessible and (3) preserving material heritage, based on Gilles Deleuze and Félix Guattari’s rhizome. Using such an approach, I mean to gather software, hardware, design information, anecdotes and other relevant data related to game consoles and cartridges.
By rhizome, I mean “an acentered, non-hierarchical, nonsignifying system without a General and without an organizing memory or central automaton” (21), as Deleuze and Guattari introduce it in “A Thousand Plateaus: Capitalism and Schizophrenia.” I will begin by developing the notion of destabilizing the centrality of the object in order to map “assemblages” (connections); then, I will move on to the process of mapping collective assemblages. I will conclude by presenting a collaborative approach to the preservation of cultural heritage.

1 Collecting by Destabilizing the Centrality of the Object

In my experience, while preparing probes one and two for the Game Boy Classic and Tiny Toon Adventures Montana’s Madness gameplay, I found it useful to approach each of these two objects with the question “What does it function with?”, a question Deleuze proposes in “Rhizome” with the intention of destabilizing the centrality of the object of study. The question “what does it function with” shatters the linear unity of the object. What is more, once placed inside an assemblage or multiplicity, the Game Boy situates itself “at a higher unity, of ambivalence or overdetermination” (6). Moreover, mapping the trajectory of the object allows me to stop considering the Game Boy merely as an object with which to play video games. Instead, I begin to consider the Game Boy as an event, one which correlates with other events. For instance, when I situate the Game Boy (the event) between the eighties and the nineties, the focus lies on the objects the GB echoes.

(Address the Audience)

Tell me, what do you see when you set your eyes on the GB?

(Pause)

Now, let us go back in time, to the year 1988 (one year before the launch of the GB).

Hint: perhaps you see an object you carry with you every day, everywhere.

Can you relate the GB to two more objects?

To draw your attention back: Deleuze claims that “any point of the rhizome can be connected to anything other, and must be” (7). By mapping the first object (the GB in this case), you approach other objects. You establish echoes not only of objects used in the past but also of current objects. I call this mapping process “mirroring.” Mirroring allows lines of connection to expand. In a way, the rhizomatic approach fosters relationships between fields (in terms of objects and disciplines of study), given that there is no central point, no actual origin and, above all, no linearity, which brings me to my second point.

(A quick recap:

- A non-linear analysis of the GB.

- Two (of many) lines of thought which can be further explored regarding the objects the GB mirrors, to start off.)

2 Making Accessible Encoded Knowledge/Mapping Collective Assemblages

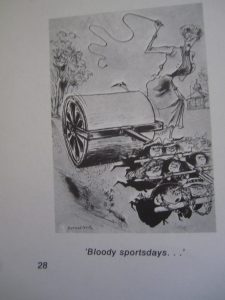

Oversignifying brings about the collapse of the rhizome. In Deleuze’s words, “a rhizome may be broken, shattered at a given spot, but it will start up again on one of its old lines, or on new lines” (9). Now, let me show you (the audience) a map of a new rhizome on the GB, one whose focus is to place the GB not as an event but as a generator of experiences. Henry Lowood (qtd. in Guins) establishes that experiences are “generated by a framework of rules, codes or stories and expressed through interaction, competition, or play” (32). This type of rhizome requires help from scholars and students from disciplines other than Media Studies or English Literature to map “collective assemblages.” On the one hand, there is the technical system (matter) of the GB, which, when analyzed, makes visible the assembly language, commands, timings, and opcodes. To map these assemblages in the rhizome, students of Computer Science and scholars knowledgeable about such codes might work together to prepare and establish correlations with other objects which may or may not share similar technical systems. On the other hand, there is the gamer (actor), whose interaction with the game (as purchased) establishes a given number of screen memories (fragments) while playing. Moreover, at a metagame level, the gamer can also modify the technical system of the game to create a “unique” game. Hence, the gamer (player) becomes more than a consumer: the gamer becomes a game designer. By rearranging a couple of elements, the game designer produces a different output. Each of these members shares and submits their own generated experience, be it by playing the game or by designing a game of their own. Experience and experimentation become visible here, which brings me to my third point.

(A quick recap:

- Both scholars and students combine maps to represent the technical system of the object (GB in this case).

- There exists a sense of community before, during and after the mapping of the technical system of the object.)

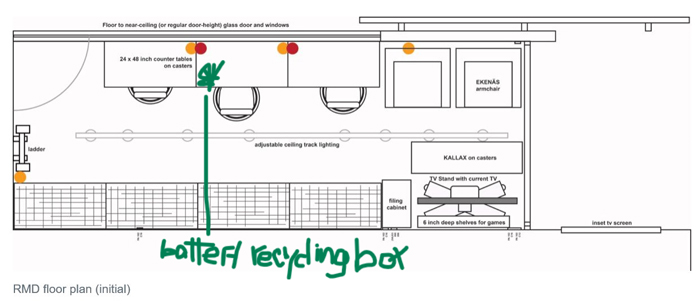

3 Preserving Material Heritage

Regarding “forms of access” and forms of preservation, Sheila Brennan suggests in “Making Room by Letting Go” that institutions should “partner with other arts organizations to use collections in new ways.” Under the same premise, I would add the creation of a website in partnership with other universities. The collaborative creation of the website not only paves the way for greater accessibility of information but also ensures the commitment of other institutions to the preservation of manuals, pictures, videos and generated experiences from their contributors. Again, since the gathering of knowledge is not centralized but becomes a shared experience, the rhizome seems to be an efficient way, to a certain degree, to preserve cultural capital for future generations; however, some pitfalls should be considered: they are imaginative, historical, contextual and geographical. Once the website is online and its database accessible, the four pitfalls could converge to shift interpretations of the stored data, owing to changes in social and cultural ways of thinking and to technological advancement. Therefore, I would suggest that, to overcome such constraints, future scholars should encourage a “compare and contrast approach” to establish nuances and shifts in the signifying of objects and their data. Another way would be the preparation of a time capsule in the years to come, in which paper-based information and specific objects are placed in one of the pillars of each partnered university, like the one located in the cornerstone of the Henry F. Hall Building (in 1967) at Concordia University. If several time capsules are prepared, each containing fragments of specific objects, the scholars dwelling in the halls of Concordia in a hundred years’ time will rethink and reinterpret such objects on their own terms, in accordance with the schools of thought available at that time.
In a way, objects will not lose their relevance, because they will continue to be studied in the centuries to come.

(A quick recap:

- Knowledge is a shared experience.

- A collaborative gathering of collections ensures the accessibility and preservation of collections for cultural heritage.)

To sum up, the present study presents the rhizome as a non-hierarchical approach for collecting, making accessible and preserving cultural capital, as Gilles Deleuze and Félix Guattari propose in “A Thousand Plateaus: Capitalism and Schizophrenia.” First, studying the object through a non-linear analysis establishes connections among objects beyond definite historical times. Second, because it lacks a central automaton, the rhizome (as another approach to collecting information) promotes collaborative work between scholars and students, who blend maps of representation (data). Hence, they share personal experiences and knowledge, which in turn helps ensure the accessibility and preservation of collections through a digital repository.

(If time allows!) (Please meditate on the following train of thought: in the rhizome, there is no centrality, but multiplicity; there is not one entry point, but endless entry points to the study of objects; there is no process of tracing memories and objects; instead, there is “letting go” as part of the collecting process.)

Works Cited

Brennan, Sheila. “Making Room by Letting Go: A Look at the Ephemerality of Collections.” Preservation Leadership Forum, National Trust for Historic Preservation, 12 Aug. 2014, forum.savingplaces.org/blogs/special-contributor/2014/08/12/making-room-by-letting-go-a-look-at-the-ephemerality-of-collections.

Deleuze, Gilles, and Félix Guattari. “Introduction: Rhizome.” A Thousand Plateaus: Capitalism and Schizophrenia. 11th ed. Minneapolis: U of Minnesota, 2005. Print.

Guins, Raiford. Game After: A Cultural Study of Video Game Afterlife. MIT Press, 2014. Print.